Writing a Web Crawler in Python

How to write a Python crawler that takes a keyword you specify and fetches web pages containing content related to that keyword

Tools/Materials

- ThinkBook15

- Microsoft Windows10.0.19043.1083

- PyCharm2019.2.3

Steps

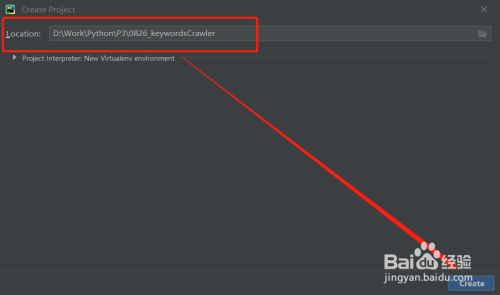

- 1

Create a project and set where it is stored

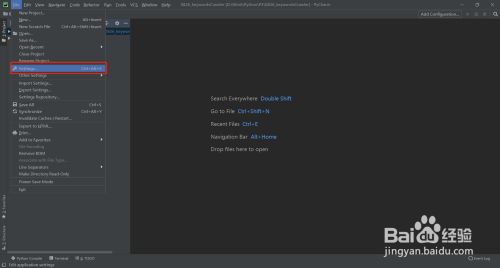

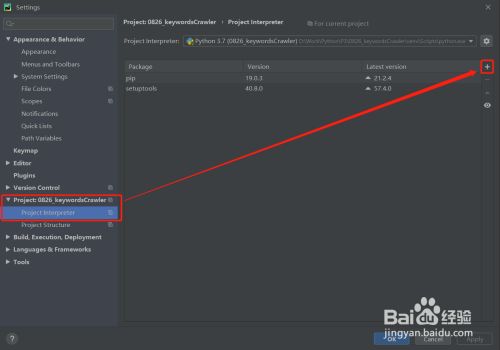

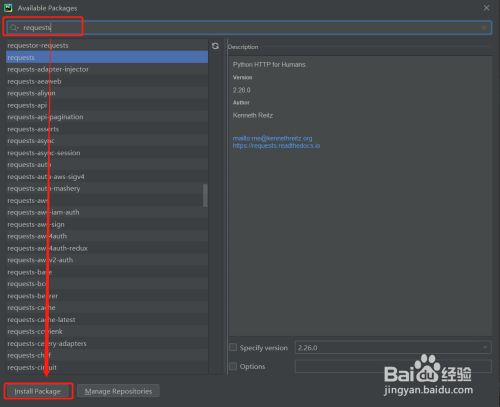

- 2

Install the requests module
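The install screens are only in the screenshots. One common way is to run `pip install requests` in PyCharm's terminal so that it uses the project's interpreter. As a sketch, this checks that the module can be imported by the interpreter running the script:

```python
import sys

import requests

# Which Python interpreter is running this script; it should be the
# interpreter configured for the PyCharm project.
print("Interpreter:", sys.executable)

# requests publishes its version string as requests.__version__.
print("requests version:", requests.__version__)
```

An `ImportError` here usually means requests was installed into a different interpreter than the one the project uses.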

- 3

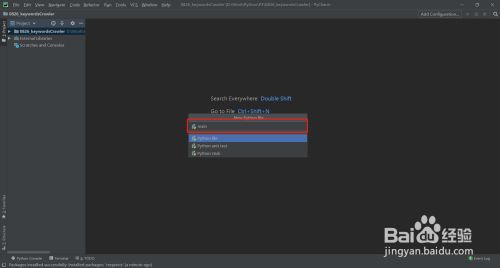

Create a .py file

- 4

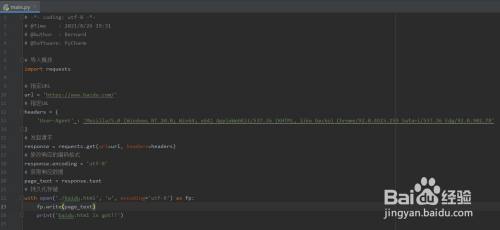

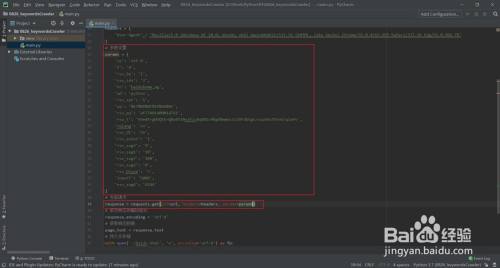

Write the basic crawler skeleton code
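The skeleton code itself appears only in the screenshots. The following is a minimal sketch that assumes the common four-part pattern: specify the URL, send the request, read the response text, and save it. The User-Agent string and the function name `crawl` are illustrative assumptions, not taken from the article.

```python
import requests

# Example User-Agent (an assumption: copy the real one from your browser's
# request headers). Without it, requests identifies itself as
# "python-requests/x.y", which many sites treat differently.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36"
    )
}


def crawl(url, filename, params=None):
    """Basic crawler skeleton: fetch one page and save it to a local file."""
    # Steps 1-2: specify the URL and send a GET request
    response = requests.get(url, params=params, headers=HEADERS, timeout=10)
    response.raise_for_status()  # raise an exception on 4xx/5xx responses
    # Step 3: read the body as text, letting requests guess the charset
    response.encoding = response.apparent_encoding
    page_text = response.text
    # Step 4: persist the page as a UTF-8 HTML file
    with open(filename, "w", encoding="utf-8") as f:
        f.write(page_text)
    return page_text
```

For example, `crawl("https://www.baidu.com/", "baidu.html")` saves Baidu's home page. The `timeout` stops the script from hanging if the server never answers.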

- 5

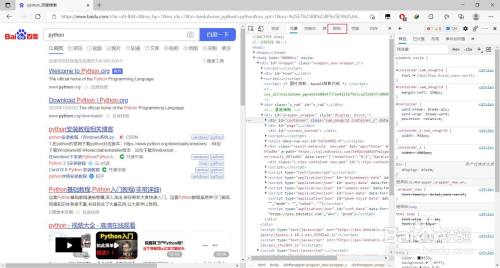

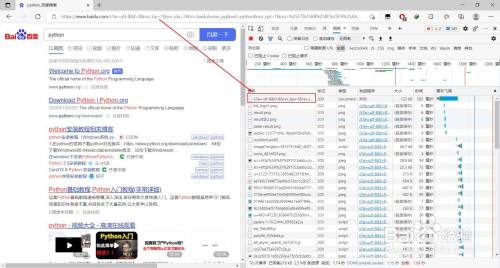

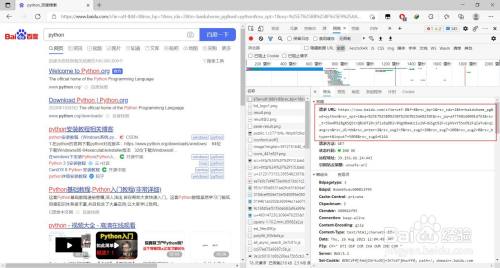

Use the Microsoft Edge browser to visit Baidu and search for a keyword

- 6

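On the search results page, right-click and choose "Inspect" from the menu to open the browser's built-in developer tools, which can capture network requests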

- 7

In the developer tools, select the "Network" tab

- 8

Press Ctrl+R to reload the page so the Network tab records its requests

- 9

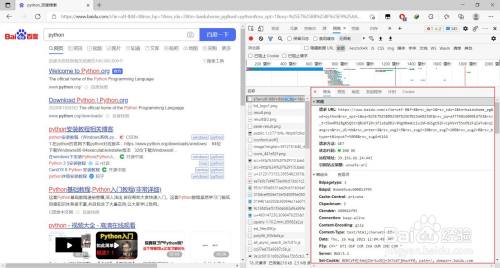

Find the request whose name matches the address being requested, that is, the search results page itself

- 10

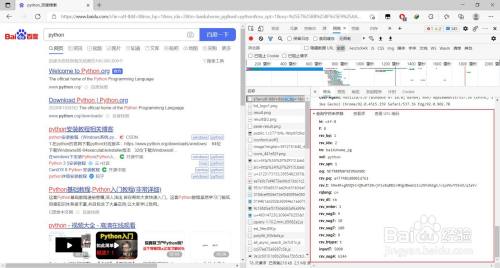

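In that request's "Headers" tab, find "Query String Parameters" and copy its contents (newer Chromium-based browsers show this section under a separate "Payload" tab)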

- 11

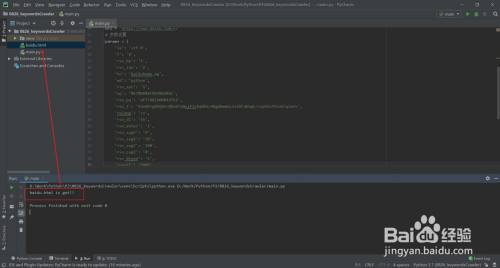

In the code, wrap the copied query string parameters in a dictionary and pass it to the get() method through the params argument
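Here is a sketch of this step, assuming the captured parameters are pasted in as a plain dict. Apart from `wd`, the keys shown are examples, and the function name `search` is hypothetical.

```python
import requests

# Query String Parameters copied from the developer tools, pasted as a dict.
# Only "wd" (the search keyword) is named in this article; "ie" is an
# illustrative extra key, and a real capture usually contains more.
params = {
    "ie": "utf-8",
    "wd": "python",
}


def search(url, params):
    """Send a GET request; requests encodes params and appends them to url."""
    return requests.get(url, params=params, timeout=10)
```

Pass the dict through `params` rather than pasting it onto the URL by hand. That way requests handles the URL encoding of spaces and non-ASCII keywords.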

- 12

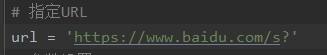

Change the specified url to the request URL from the capture, keeping only the part before the query string

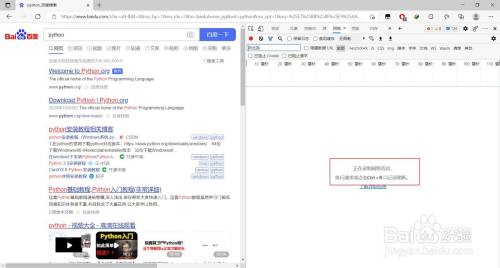

Notice that the second half of the request URL in the captured request is exactly the query string parameters found earlier
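You can check this without sending anything. requests can build ("prepare") a request, and the prepared URL is the base URL followed by the URL-encoded parameters. This sketch assumes `https://www.baidu.com/s` as the search address and uses illustrative parameter values.

```python
from urllib.parse import urlencode

import requests

BASE_URL = "https://www.baidu.com/s"  # assumed search address
# Illustrative parameters; only "wd" comes from the article.
params = {"ie": "utf-8", "wd": "python 爬虫"}

# Build the request the way requests.get() would, but without sending it.
prepared = requests.Request("GET", BASE_URL, params=params).prepare()

# The standard library produces the same query string by hand.
manual_url = BASE_URL + "?" + urlencode(params)

print(prepared.url)
print(prepared.url == manual_url)
```

The second line printed is `True`. This is why the `url` in the code only needs the part before `?`.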

- 13

Run the code; it runs successfully and creates a new file

- 14

Open the file: it matches the page you got earlier by searching in the browser, which shows the crawl succeeded

- 15

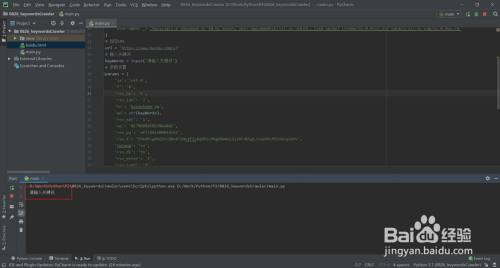

In the "Query String Parameters", the value after wd is the keyword you typed, so make this parameter dynamic in the code
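One way to do this is to keep the other captured parameters and overwrite only `wd` with whatever the user types. This is a sketch, and the helper names `build_params` and `ask_keyword` are hypothetical.

```python
def build_params(keyword, captured_params=None):
    """Return a copy of the captured parameters with 'wd' set to keyword."""
    # Copy so the original dict of captured parameters is left unchanged.
    params = dict(captured_params or {})
    # 'wd' is the parameter that carries the search keyword.
    params["wd"] = keyword.strip()
    return params


def ask_keyword(prompt="Enter a keyword: "):
    """Read the search keyword from the console."""
    return input(prompt)
```

Pass the result as `params=` to `requests.get()`, for example `requests.get("https://www.baidu.com/s", params=build_params(ask_keyword(), captured))`. Here `captured` stands for your dict of copied parameters.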

- 16

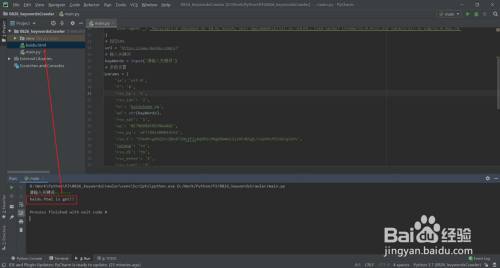

Run the code, type a keyword, and wait for it to finish

- 17

Open baidu.html: it contains the search results for the keyword you typed, so the crawl succeeded
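Opening the file in a browser is the real check. A rough scripted check is to look for the keyword in the saved HTML, assuming the page was saved as UTF-8. The helper name `page_mentions` is hypothetical. A match only shows that the keyword appears somewhere in the page, not that the page is a full results page.

```python
from pathlib import Path


def page_mentions(filename, keyword):
    """Return True if the saved HTML file contains the keyword text."""
    # Assumes the file was written as UTF-8; change encoding if yours differs.
    html = Path(filename).read_text(encoding="utf-8")
    return keyword in html
```

For example, `page_mentions("baidu.html", "python")` after searching for "python".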

END

Notes

- Different browsers lay out their developer tools differently, so adapt these steps to the browser you are using

- Published 2021-08-28 13:01
